How to Detect AI Content Like an Expert

August 22, 2025

Spotting AI-generated text is part art, part science. It’s about developing an eye for the subtle giveaways—the things that seem too perfect—and then backing up your hunch with the right tools. You’re looking for content that feels a little hollow, lacks a distinct personality, repeats itself, or steers clear of any real, personal stories.

The Hidden Rise of AI-Generated Content

Let's be honest, AI-generated content is absolutely everywhere. It’s in our inboxes, on our favorite blogs, and even in academic papers. This explosion is thanks to a new wave of advanced AI copywriting tools that can churn out text with staggering efficiency.

But what makes it so tricky to spot? The AI models are evolving at a breakneck pace, producing text that’s often hard to tell apart from something a person wrote.

This has sparked a constant cat-and-mouse game. For creators, editors, and educators, telling human from machine isn't just a fun party trick—it's become a critical part of the job. It's essential for:

- Academic Integrity: Making sure students are submitting their own work.

- Content Authenticity: Protecting a brand’s unique voice and earning reader trust.

- Information Reliability: Stopping the spread of AI-generated "facts" that sound convincing but are completely wrong.

Why Detection Is Getting Harder

The challenge isn't just that AI models are getting smarter. It's that the global AI landscape is maturing, and all the top models are starting to sound alike. As different systems are developed around the world, they’re all getting incredibly good, which makes their writing less unique and harder to trace back to a specific generator.

Take the performance gap between leading U.S. and Chinese AI models, for example. On key benchmarks, that gap shrank from over 17 percentage points to less than 5 percentage points in just one year. This reflects a worldwide trend: AI systems are becoming more powerful and more similar. You can dig into the full report on these maturing AI ecosystem trends if you're curious.

What this means is there’s no single "AI writing style" anymore. The newest models can mimic different tones, styles, and even sprinkle in subtle imperfections to throw us off the scent. The old tricks just don't work as well.

This new reality means we have to get smarter, too. Just looking for robotic phrasing isn’t going to cut it. We need to pair our own intuition with the analytical power of technology to stay ahead of the curve. Understanding this context is the first step, and this guide will walk you through exactly how to do it—from simple manual checks to using the best tools for the job.

Spotting AI Without Any Tools

Before you even think about pasting text into a specialized program, remember that your own critical eye is your most powerful tool. Learning how to detect AI by hand starts with trusting your gut and looking for the subtle clues that machines often leave behind.

AI-generated text often has this odd, almost too-perfect quality. It can feel hollow and unnatural. Think of it like a digital uncanny valley—the grammar might be flawless and the information logically structured, but it’s missing a distinctly human touch. That feeling is your first and most important clue.

Look for an Unnatural Voice and Tone

Human writing is full of quirks. We use unique phrasing, inject personal opinions, and sometimes we bend the rules for effect. AI models, trained on mountains of data, tend to smooth out all those interesting edges, leaving you with a voice that’s correct but completely bland.

For example, an AI might describe a tough project as "a challenging endeavor that required significant effort." A real person is far more likely to say something like, "It was a nightmare, but we finally got it done." See the difference? One lacks personality and lived experience.

Here’s what to keep an eye out for:

- Overly Formal Language: AI often defaults to a stuffy, encyclopedic tone, even for casual topics. It just doesn't know how to relax.

- No Clear Point of View: You won’t find many strong, controversial, or deeply personal takes. The text almost always plays it safe, avoiding any real stance.

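- Consistent Perfection: Flawless spelling, grammar, and sentence structure across thousands of words is a red flag in unedited writing, because real people make typos. Professionally edited copy can be just as clean, so weigh this clue alongside the others.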

When you read a piece of content, just ask yourself: does this sound like a person talking, or does it sound like a textbook? The answer is often incredibly revealing.

Check for Repetitive Patterns

Another huge tell is repetition. AI models can get stuck in a loop, using the exact same sentence structures or transition words over and over again. You might notice every other paragraph kicks off with "Additionally," "Furthermore," or "In addition." It gets old, fast.

This isn't just about word choice; it's about the very structure of the writing. An AI might create a list where every bullet point is formatted identically: "Noun + verb + descriptive clause." While it’s technically correct, that rhythmic, predictable pattern feels robotic and lacks the natural variation of human thought.
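If you want to put a number on that hunch, a few lines of Python can count how often sentences open with the same stock transitions. This is only a rough sketch: the phrase list is a hypothetical starter set based on the examples above, the sentence splitter is deliberately naive, and the function names are made up for illustration.

```python
import re
from collections import Counter

# Hypothetical starter list taken from the examples in this section; extend as needed.
TRANSITIONS = ("additionally", "furthermore", "in addition")


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def opener_counts(text):
    """Count how many sentences start with each transition phrase."""
    counts = Counter()
    for sentence in split_sentences(text):
        lowered = sentence.lower()
        for phrase in TRANSITIONS:
            if lowered.startswith(phrase):
                counts[phrase] += 1
                break
    return counts


def transition_share(text):
    """Fraction of sentences that open with one of the listed transitions."""
    sentences = split_sentences(text)
    if not sentences:
        return 0.0
    return sum(opener_counts(text).values()) / len(sentences)
```

A high share doesn't prove anything on its own, since plenty of people lean on "Furthermore" too. It simply tells you where to look more closely.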

A key giveaway is the absence of personal anecdotes or real-world stories. AI can't share a memory or a genuine feeling from a past experience. If a text discusses a topic without offering a single personal story or unique insight, it’s worth a second look.

Spotting Factual Inconsistencies

AI models are incredibly confident, even when they are completely, utterly wrong. This leads to a phenomenon known as "hallucination," where the AI just makes stuff up—plausible-sounding facts, stats, or sources that are entirely fabricated.

The best way to catch this is to focus on very specific claims. If the text mentions a statistic like "87% of marketers agree," do a quick search. If you can't find a credible source to back that number up, there's a good chance an AI pulled it out of thin air.

This is especially common with:

- Dates and Historical Events: The AI might get timelines mixed up or mash details together from different events.

- Technical Specifications: It can invent product features or misstate scientific data with unearned confidence.

- Quotes: AI often attributes made-up quotes to real people. It sounds good, but it's pure fiction.
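To make that fact-checking pass faster, you can pull the specific, checkable claims out of a text before you start searching. The sketch below is one possible approach, not a standard tool: the function name and the regular expressions are assumptions, and it only handles straight double quotes and years from 1500 to 2099.

```python
import re


def extract_checkable_claims(text):
    """Collect percentages, four-digit years, and quoted passages for manual review."""
    return {
        # Matches figures such as "87%" or "3.5%".
        "percentages": re.findall(r"\b\d+(?:\.\d+)?%", text),
        # Matches years from 1500 through 2099.
        "years": re.findall(r"\b(?:1[5-9]|20)\d{2}\b", text),
        # Matches passages of 10+ characters inside straight double quotes.
        "quotes": re.findall(r'"([^"]{10,})"', text),
    }
```

The output is just a checklist. Each item still needs a human to look for a credible source.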

Honing your ability to spot these manual clues is the foundation of good AI detection. It helps you build a strong hypothesis before you even touch a tool, making your entire verification process sharper and more efficient.

Using AI Detection Tools the Smart Way

When your gut tells you something is off but you can’t quite put your finger on it, AI detection tools can give you a data-backed second opinion. But using them effectively is more than just copying and pasting text.

The secret is to think of these tools not as a final verdict, but as another piece of evidence in your investigation. They don’t just spit out a simple "yes" or "no." Instead, they analyze the text for specific linguistic patterns and give you a probability score. Knowing what those scores actually mean is the key to figuring out how to detect AI accurately.

What AI Detectors Are Actually Looking For

Most detection tools are trained to spot patterns that are statistically unlikely in human writing but common for large language models. They’re basically digital bloodhounds sniffing out tells.

Two of the big metrics they often look at are:

- Perplexity: This is a fancy way of measuring how predictable a string of words is to a language model. Human writing tends to vary its vocabulary and sentence structures in ways a model doesn't expect, giving it high perplexity. AI-generated text, built for coherence, often follows very predictable word patterns, resulting in low perplexity.

- Burstiness: This refers to the rhythm and variation in sentence length. Humans write in bursts—a long, winding sentence might be followed by a few short, punchy ones. AI models, on the other hand, tend to produce sentences of a much more uniform length and structure, leading to low burstiness.

So, a high "AI-generated" score usually means the text has low perplexity and low burstiness. In other words, it’s just a bit too perfect, too uniform, and too predictable to feel authentically human.
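To get a feel for these two metrics, here is a toy sketch in Python. It is not how any commercial detector works. It assumes burstiness can be approximated by the coefficient of variation of sentence length, and it stands in for a real language model with a simple word-frequency (unigram) model built from a reference text you supply. All function names are made up for illustration.

```python
import math
import re
import statistics
from collections import Counter


def sentence_lengths(text):
    """Word count of each sentence, using a naive punctuation-based split."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Assumed proxy: population standard deviation of sentence length / mean."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


def _words(text):
    """Lowercase word tokens (letters and apostrophes only)."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text, reference):
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`."""
    ref_tokens, tokens = _words(reference), _words(text)
    if not tokens:
        raise ValueError("text has no word tokens")
    counts = Counter(ref_tokens)
    vocab_size = len(set(ref_tokens) | set(tokens))
    total = len(ref_tokens)
    # Sum of log-probabilities; unseen words get the smoothed minimum probability.
    log_prob = sum(math.log((counts[t] + 1) / (total + vocab_size)) for t in tokens)
    return math.exp(-log_prob / len(tokens))
```

Three sentences of identical length score a burstiness of zero, while a mix of one-word and fourteen-word sentences scores well above it. Real detectors estimate perplexity with large neural language models, so treat the unigram version purely as a way to see the idea in action.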

Think of a detection score like a weather forecast. A 95% chance of AI doesn’t guarantee it was machine-written, just like a 95% chance of rain doesn't guarantee you'll get soaked. It's a strong indicator, but you still need to look out the window and use your own judgment.

Building a Reliable Detection Workflow

One of the biggest mistakes people make is relying on a single tool. Every detector uses a slightly different algorithm and training data, which means their results can vary—sometimes wildly. A much smarter approach is to cross-reference your findings to build a stronger case.

First, get a consensus from multiple tools. Run the text through at least two or three different detectors. If one tool flags it as 90% AI while another says it’s only 30%, that’s your cue to lean more heavily on your own manual review. When several tools agree, you can have much more confidence in the result.

Next, look at what the tools highlight. Many detectors will highlight specific sentences or phrases that scream "AI." Pay close attention to these sections. Do they line up with the manual red flags you spotted earlier, like a missing personal voice or strangely repetitive phrasing? This is where the data meets your intuition.

Finally, always combine the tech with your own analysis. A detector's score should support your judgment, not replace it. If a tool flags a piece that’s filled with genuine personal anecdotes and a unique voice, the tool is probably wrong. And if a text gets a passing grade but still feels hollow and robotic to you, trust your gut.
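If you check content regularly, the cross-referencing step is easy to script. The sketch below assumes you have already collected a 0 to 100 "likely AI" score from each detector by hand. The function name and both thresholds are hypothetical defaults, not values recommended by any tool.

```python
from statistics import mean


def summarize_scores(scores, max_spread=30.0, flag_threshold=70.0):
    """Summarize detector scores.

    `scores` maps a detector name to its percent likelihood of AI (0-100).
    `max_spread` and `flag_threshold` are assumed, adjustable cutoffs.
    """
    if len(scores) < 2:
        raise ValueError("cross-referencing needs at least two detectors")
    values = list(scores.values())
    spread = max(values) - min(values)
    average = mean(values)
    if spread > max_spread:
        verdict = "detectors disagree: rely on manual review"
    elif average >= flag_threshold:
        verdict = "detectors agree: likely AI, confirm manually"
    else:
        verdict = "detectors agree: no strong AI signal, confirm manually"
    return {"average": average, "spread": spread, "verdict": verdict}
```

With scores of 90 and 30, the 60-point spread trips the disagreement check and sends you back to manual review. Every verdict ends with a manual step on purpose, because the score supports your judgment rather than replacing it.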

This integrated approach helps you avoid false positives and make a much more informed decision. As you get more experienced, you'll find the right balance between trusting the data and your own critical thinking. And for those looking to fix AI-generated content after it's been detected, understanding what an AI detector and rewriter can do is a great next step toward creating more authentic text.

Comparing Popular AI Detection Tools

With so many tools on the market, choosing the right one can feel overwhelming. Each has its strengths, whether it's raw accuracy, a user-friendly interface, or the ability to check different content formats. This table breaks down a few of the leading options to help you decide.

| Tool Name | Best For | Key Feature | Pricing Model |
|---|---|---|---|
| Originality.ai | SEO and academic use | Scans for both AI and plagiarism | Subscription-based |
| Copyleaks | Enterprise and education | Multi-language support and LMS integration | Freemium with paid tiers |
| Winston AI | Educators and content teams | High accuracy with clear scoring reports | Freemium with paid tiers |
| GPTZero | General purpose detection | Highlights AI-like sentences for review | Freemium with premium plans |

Ultimately, the "best" tool depends on what you need it for. An educator might prefer Winston AI for its detailed reports, while an SEO agency might lean on Originality.ai for its dual plagiarism and AI-checking features. It’s always a good idea to test a few free versions to see which workflow you prefer.

Why AI Detectors Can Get It Wrong

AI detection tools can be a handy data point, but they are far from perfect. If you treat their scores as gospel, you’re setting yourself up for trouble—think false accusations and bad judgments. Understanding their limits is a critical part of learning how to detect AI without causing a mess.

These tools aren't magic. They're just pattern-recognition machines. If a piece of writing doesn't have the typical chaos of human expression—or looks a little too much like the predictable patterns of an AI—it gets flagged. And that leads to a whole lot of false positives.

When Human Writing Looks Like AI

Some human writing styles are just magnets for being misidentified as AI-generated. The algorithms aren't all that smart and often get tripped up by nuance.

Here are a few common scenarios where a human writer might get flagged:

- Non-Native English Speakers: Someone who learned English as a second language might naturally use simpler vocabulary or more formulaic sentence structures. To an AI detector, this can look a lot like the low "perplexity" it's trained to associate with machine writing.

- Technical or Academic Writing: Content that’s dense, formal, and structured for pure clarity often lacks the "burstiness" of creative prose. A scientific paper or technical manual written by a human expert? It can easily trigger a false positive.

- Following Strict Guidelines: When a writer has to stick to a super-rigid content brief or template, their creativity is boxed in. The end result can feel uniform and less varied, which is another red flag for detectors.

Relying solely on a detector's score without considering who wrote the text and why is a huge mistake. These tools should be a guide, not a judge and jury.

How AI Can Trick the Detectors

Just as human writing can be misread, AI-generated text can be tweaked to fly right under the radar. It's a constant cat-and-mouse game between AI generation and detection, and a few simple tricks can fool many of the tools out there today.

One of the most common methods is simply blending human and AI text. A writer might use AI to create a rough draft or an outline, then go back and heavily edit it—injecting their own voice, stories, and unique phrasing. The final product is a hybrid that most detectors just can't classify accurately.

Even basic paraphrasing works surprisingly well. Just running AI text through a separate paraphrasing tool—or even another AI model with instructions to rewrite it—can change the sentence structure and word choice enough to dodge detection. For a deeper dive, our guide explores in detail why AI detectors are not always reliable.

Accuracy is still a massive issue. One analysis of AI-generated medical literature found that detection tools were only right about 63% of the time, with a false positive rate floating around 25%. The moment that AI text was paraphrased by another AI, detection accuracy cratered by nearly 55%. It just goes to show how fragile these systems are. You can read more about these AI detector accuracy findings.

At the end of the day, human oversight is the one thing you can't replace. Use the tools, look at the scores, but always let your own critical judgment have the final say.

Putting It All Together: A Framework for Confident AI Detection

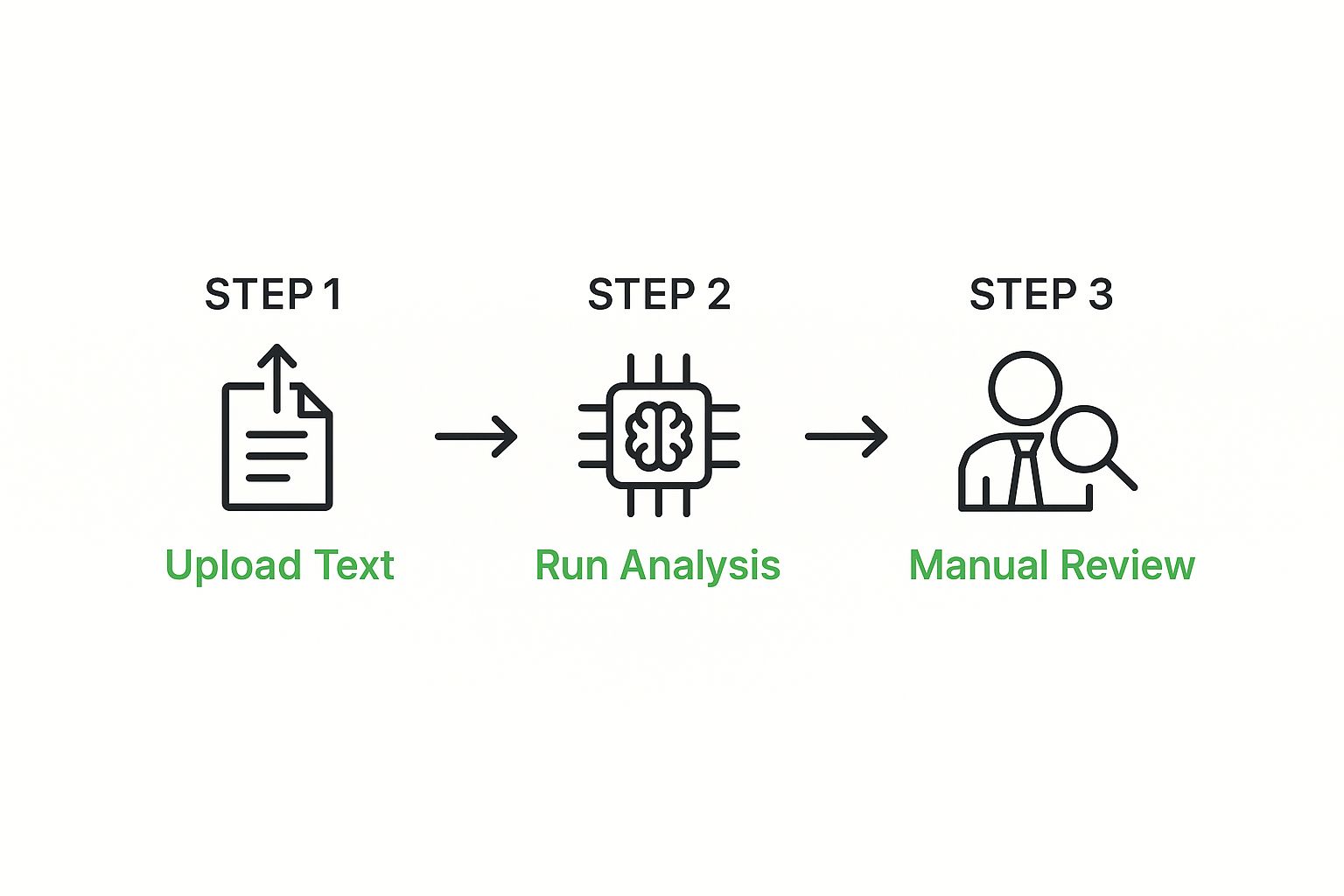

The most reliable way to spot AI-generated content isn’t about picking sides between human intuition and automated tools. It’s about combining them into a single, powerful workflow. Think of it as using your own expertise to form an opinion, then backing it up with data. This multi-layered approach is the key to making a final call you can feel confident about.

This isn’t just about getting a simple "yes" or "no" answer. It starts with your own critical eye, looking for those tell-tale signs. Then, you bring in the tools for a quantitative check—ideally, more than one. Finally, you step back in to weigh all the evidence and make an informed decision.

The infographic above breaks down this simple but incredibly effective three-part process.

As you can see, the tools are just a middle step. They aren't the final word. Your judgment is, and always should be, the most critical component of the whole process.

Building Your Blended Workflow

Let's see how this plays out in the real world. Imagine you're reviewing a blog post that just feels… off. The writing is clean, but it has no soul, no distinct personality. This is where your manual check, your first line of defense, comes in. Are the sentence starters all the same? Does it feel like it's missing personal stories or specific, verifiable details?

Once you have a hunch, it's time to bring in the tools. The trick here is to not put all your faith in a single detector. Instead, run the text through two or three different ones. This helps you build a consensus and protects you from the biases or blind spots of any one algorithm.

For example, your results might look something like this:

- Tool A flags it at 85% AI-generated.

- Tool B comes back with a higher 92% probability.

- Tool C gives it a more conservative 75%.

The exact numbers are different, but the trend is undeniable. All three tools are pointing strongly toward an AI origin. This data, combined with your initial gut feeling, starts to build a pretty compelling case. The key is knowing how to interpret this evidence without treating detector scores as gospel.

The truth is, detection tools aren't perfect. During one academic year, 68% of high school teachers used AI detection software. In that time, Turnitin flagged 11% of 200 million papers as containing significant AI content. The problem? Its own false positive rate can be as high as 4%. This really drives home why a blended, human-and-tool approach is the most dependable way to get it right.
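A quick back-of-the-envelope calculation shows why a small false positive rate still matters. The sketch below applies Bayes' rule. Only the 4% false positive rate comes from the figures above. The share of papers that really contain AI text and the share of those the detector catches are hypothetical inputs you have to supply, and the function names are made up for illustration.

```python
def flag_reliability(prevalence, detection_rate, false_positive_rate):
    """Probability that a flagged paper really contains AI text (Bayes' rule).

    prevalence: assumed share of papers that contain AI text (0-1).
    detection_rate: assumed share of AI papers the detector flags (0-1).
    false_positive_rate: share of human-written papers flagged anyway (0-1).
    """
    true_flags = prevalence * detection_rate
    false_flags = (1 - prevalence) * false_positive_rate
    if true_flags + false_flags == 0:
        raise ValueError("no papers would be flagged with these inputs")
    return true_flags / (true_flags + false_flags)


def expected_false_flags(total_papers, prevalence, false_positive_rate):
    """Expected number of human-written papers that get flagged anyway."""
    return total_papers * (1 - prevalence) * false_positive_rate
```

As an illustration only, suppose 10% of papers contain AI text and the detector catches 90% of them. With a 4% false positive rate, `flag_reliability(0.10, 0.90, 0.04)` comes out at roughly 71%, so more than one flag in four would land on a human writer. In a hypothetical batch of 1,000 papers, `expected_false_flags(1000, 0.10, 0.04)` works out to about 36 wrongly flagged students.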

In the end, you are the final judge. The scores from the detectors are just data points, but your manual analysis provides the all-important context. You can compare the sentences the tools highlighted as "AI-like" with your own notes. If they match up with the lifeless tone and repetitive phrasing you spotted from the start, your confidence in the assessment grows.

This framework is more important than ever, especially as more writers learn how to bypass AI detection using increasingly sophisticated methods.

A Few Common Questions About AI Detection

If you're trying to figure out the world of AI detection, you're not alone. It brings up a lot of questions. Let's walk through some of the most common ones.

Can AI Detectors Ever Be 100% Accurate?

Nope. Not a single tool on the market today is 100% accurate. They all have their blind spots and make mistakes.

You’ll run into both false positives (where human writing gets flagged as AI) and false negatives (where AI content slips right past the goalie). Things like simple paraphrasing, newer AI models, or even just mixing a little human writing with AI-generated text can throw them off completely.

The best approach is to treat a detector's score as a strong hint, not as the final word. It's a starting point for your own investigation, where your human judgment makes the final call.

So What’s the Best Free AI Detection Tool Out There?

Honestly, there isn’t one single “best” free tool. Their performance really depends on what you're checking. A tool that’s great at spotting AI in academic papers might completely miss it in a snappy marketing email.

The smartest move is to stop looking for a single magic bullet. Instead, find two or three free tools you trust and build a small toolkit.

Run your text through all of them. If you get a consensus—say, all three flag the text—you can feel much more confident in the result. It’s a far more reliable method than putting all your faith in one score from one program.

Is It Unethical to Use AI to Generate Content?

This is where it gets complicated. The ethics of using AI really come down to context and, most importantly, transparency. There's no universal rulebook, but some clear lines are starting to be drawn.

In school, for example, passing off AI work as your own is a huge no-no. It's widely considered academic dishonesty.

In the professional world, especially in fields like journalism or research, not telling your audience you used AI can destroy your credibility. It misleads people and breaks trust. While it might not always be illegal, pretending machine-generated text came from a human mind is a pretty shady move.

The real ethical test is transparency. Using AI as a writing assistant to help you work faster? That’s one thing. Passing its work off as your own original thought? That’s crossing a line.
