How Does an AI Checker Work? A Practical Explainer

October 23, 2025

An AI checker is basically a digital detective, trained to spot the subtle fingerprints that AI writing models leave behind.

It’s not looking for physical evidence, of course. Instead, it analyzes text for statistical anomalies and linguistic patterns that are often invisible to the human eye. Based on what it finds, it gives you a probability score estimating how likely it is that the content was machine-generated.

How AI Checkers Act Like Digital Detectives

At its heart, an AI checker is a specialized classifier model. It’s been trained on a massive library filled with millions of examples of both human-written and AI-generated text. This is how it learns to recognize the tell-tale signs of automated writing.

Think of it like a seasoned music critic who can instantly tell the difference between a live performance and a heavily produced studio track. The critic picks up on tiny variations in timing, complexity, and rhythm that most of us would miss. An AI checker does something similar, identifying patterns that are statistically common in machine writing but pretty rare in how humans express themselves.

The Key Clues They Follow

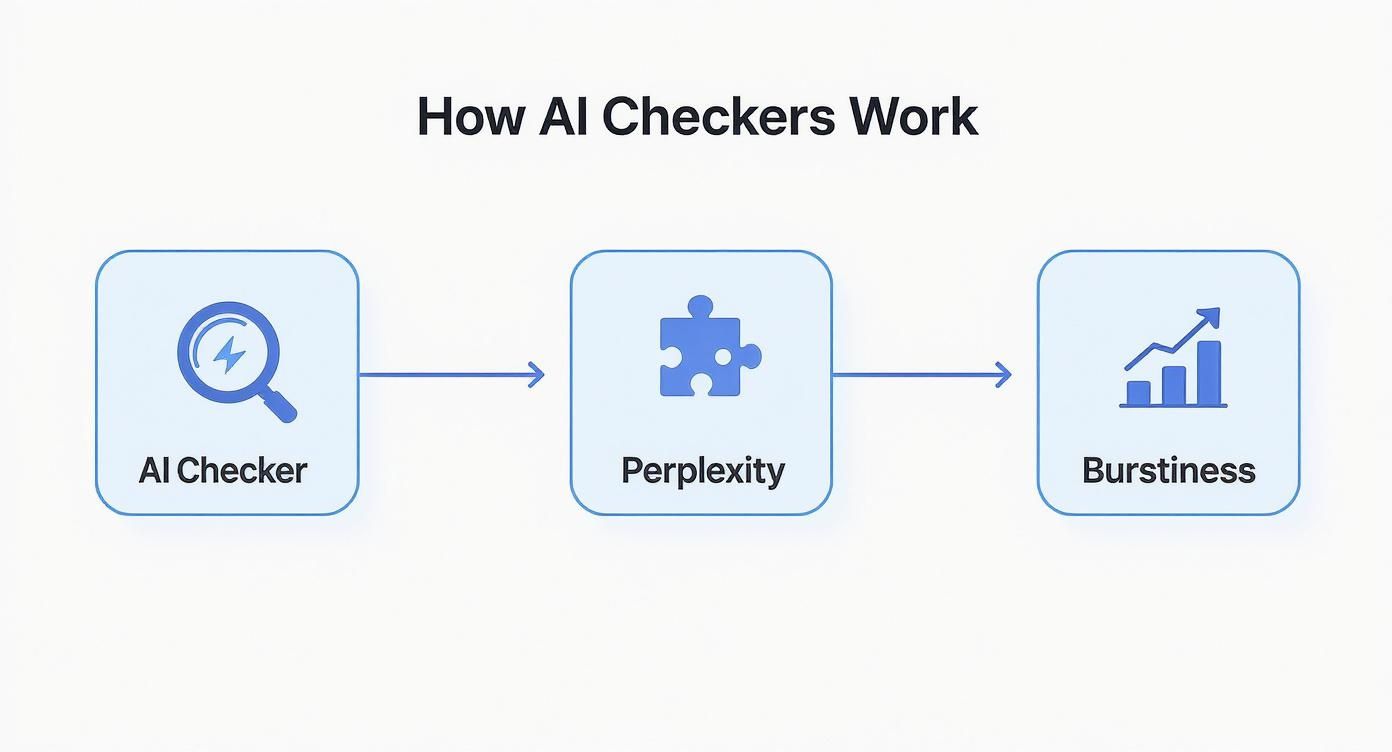

These digital detectives zero in on a few specific signals to make their assessment. The two most important clues are perplexity and burstiness.

Perplexity is just a fancy way of measuring how predictable a piece of text is. AI writing often leans on common, high-probability words, which makes it less complex and easier to predict.

Burstiness, on the other hand, is all about the natural ebb and flow of human writing—the mix of long, complex sentences with short, punchy ones. AI-generated text often lacks this dynamic rhythm, leading to a more uniform and monotonous feel.

By analyzing these and other linguistic features, an AI checker calculates a score representing the likelihood of AI authorship. This score isn’t a final verdict, but a statistical probability based on the patterns it uncovers.

To give you a clearer picture, here’s a quick breakdown of the core signals these tools are looking for.

Core Detection Signals at a Glance

This table summarizes the primary indicators AI checkers use to evaluate text, providing a quick reference for readers.

| Signal Name | What It Measures | Why It Matters for Detection |
|---|---|---|
| Perplexity | The complexity and unpredictability of word choices. | Low perplexity (predictable text) is a strong indicator of AI generation. |
| Burstiness | The variation in sentence length and structure. | Humans write with natural rhythm; AI often produces uniform sentences, resulting in low burstiness. |
| Linguistic Patterns | Repetitive phrasing, clichés, and overly formal tone. | AI models tend to overuse certain phrases and structures they learned during training. |
| Watermarking | Invisible signals embedded in the text by the AI model. | Some newer models are designed to include a hidden "watermark" to identify their output. |

Understanding these signals is the first step in learning how to detect AI and making sense of the results you get from these tools.

A Quick Look at How We Got Here

The idea of using tech to evaluate text isn’t exactly new. The groundwork for today’s AI checkers was laid back in the 1980s, when spell checkers became common in word processors. Those early tools were a first step toward automated content evaluation, and they eventually evolved into the grammar and syntax checkers we use today.

And it’s not just about content detection. Other forms of advanced text analysis, like social media sentiment analysis, also act like digital detectives, sifting through language to find complex patterns.

This guide will walk you through each of these concepts, giving you a clear picture of what’s happening behind the scenes.

Understanding Perplexity and Burstiness

If you want to understand how an AI checker really works, you need to look at the two biggest clues it hunts for: perplexity and burstiness. These terms might sound a bit nerdy, but the ideas behind them are surprisingly simple. They’re the core signals that separate the natural rhythm of human writing from the steady, predictable hum of a machine.

This concept map breaks down how an AI checker uses these two signals in its analysis.

As you can see, it all comes down to evaluating perplexity and burstiness to figure out where the text came from.

What Is Perplexity in Text?

Imagine you’re playing a word-guessing game. If a sentence starts with "The grass is...", you'd probably guess "green" in a heartbeat. It’s an easy one because the sentence is highly predictable.

Perplexity is just a way of measuring that predictability. A low perplexity score means the text is predictable, sticking to common words and phrases. AI models are masters of prediction—they’re trained on mountains of data to pick the most statistically likely word to come next. This creates smooth, logical sentences that are often a little… boring.

A text with low perplexity is a huge red flag for AI checkers. It suggests an algorithm built the content by choosing the safest, most obvious word at every turn, not a human making more creative or unexpected choices.

Human writing, on the other hand, is usually less predictable. We use metaphors, inside jokes, and weird vocabulary that an algorithm would never see coming. That creativity and unpredictability lead to a high perplexity score, which is a strong sign of a human writer.
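To make the word-guessing idea concrete, here is a minimal sketch of a perplexity calculation. It assumes a toy bigram model with add-one smoothing, trained on a three-sentence corpus invented for this example. Real checkers rely on large neural language models, so the function names (`train_bigram`, `perplexity`) and the numbers are illustrative only.

```python
import math
from collections import Counter

def train_bigram(sentences):
    """Count how often each word follows each context word."""
    contexts, bigrams, vocab = Counter(), Counter(), set()
    for tokens in sentences:
        padded = ["<s>"] + tokens          # "<s>" marks the sentence start
        vocab.update(tokens)
        contexts.update(padded[:-1])       # every token that has a successor
        bigrams.update(zip(padded, padded[1:]))
    return contexts, bigrams, vocab

def perplexity(tokens, contexts, bigrams, vocab):
    """exp of the average negative log-probability per word.

    Add-one smoothing keeps unseen word pairs from getting probability zero.
    """
    padded = ["<s>"] + tokens
    log_prob = 0.0
    for prev, word in zip(padded, padded[1:]):
        p = (bigrams[(prev, word)] + 1) / (contexts[prev] + len(vocab))
        log_prob += math.log(p)
    return math.exp(-log_prob / len(tokens))

# Toy corpus, invented for illustration.
corpus = [
    "the grass is green".split(),
    "the sky is blue".split(),
    "the grass is wet".split(),
]
contexts, bigrams, vocab = train_bigram(corpus)

predictable = perplexity("the grass is green".split(), contexts, bigrams, vocab)
surprising = perplexity("green is the sky".split(), contexts, bigrams, vocab)

print(f"predictable sentence: {predictable:.2f}")
print(f"surprising sentence:  {surprising:.2f}")
```

The familiar sentence scores roughly 3.3 and the scrambled one roughly 7.7. Lower means the model found the text easier to guess, which is the signal a checker reads as machine-like.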

The Rhythm of Writing: Burstiness

Now, let's talk about rhythm. Think about how you talk to a friend. You might use a long, winding sentence to tell a story, then follow it up with a short, punchy question. Maybe another quick comment after that. This natural variation in sentence length and structure is what we call burstiness.

Human writing is full of burstiness. It flows with a dynamic rhythm, mixing complex thoughts with simple statements. This variation is what keeps you engaged and makes the text feel real. It's the literary version of a heartbeat—full of life and never perfectly uniform.

AI-generated content often has low burstiness. The sentences tend to be the same length and follow a similar structure, creating a monotonous, robotic pace. The grammar might be perfect, but it’s missing the natural ebb and flow of a real conversation.

- Human Writing (High Burstiness): A healthy mix of long, descriptive sentences and short, direct ones. The rhythm feels alive and keeps you reading.

- AI Writing (Low Burstiness): Sentences are often uniform in length and structure. The rhythm can feel flat and mechanical, almost like a metronome.
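The contrast above can be sketched in a few lines of code. Burstiness has no single agreed formula, so this example assumes one simple proxy: the standard deviation of sentence length divided by the mean. The helper names and sample passages are made up for illustration.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., ! or ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Standard deviation of sentence length divided by the mean.

    0.0 means every sentence has the same length; higher means more variety.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

# Sample passages, invented for illustration.
varied = ("I spent the whole afternoon wandering through the old market, "
          "stopping at every stall. It was loud. Why did I ever leave?")
uniform = ("The market is very large. The stalls are quite busy. "
           "The food is really good.")

print(f"varied:  {burstiness(varied):.2f}")
print(f"uniform: {burstiness(uniform):.2f}")
```

The uniform passage has three five-word sentences, so it scores exactly 0.0, while the varied passage scores well above that. A real checker would likely look at structure as well as length, but the principle is the same.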

By looking at both perplexity and burstiness, an AI checker gets a detailed profile of the text. It's not just checking words; it's analyzing the statistical "shape" of the writing. When a piece of content is too predictable (low perplexity) and too uniform (low burstiness), the checker’s confidence that it’s looking at AI-generated text goes way up.

A Look Inside AI Checker Technology

So, what’s really going on under the hood of an AI detection tool? At its heart, an AI checker is a type of machine learning model called a classifier. Think of it as a sorting machine trained to do one thing really well: tell the difference between two categories.

In this case, those categories are "human-written" and "AI-generated." The machine learns to make this call by studying a massive library of text examples in a process we call training. This library is its training data, and it's the single most important ingredient for making an AI checker accurate and reliable.

The quality of that training data is everything. For a detector to work, its training data needs to be huge and incredibly diverse, pulling from millions of text samples across the internet, books, and academic papers. This is how it learns the subtle fingerprints that separate human creativity from machine logic.

The Role of Training Data

Imagine you’re training a bouncer to spot fake IDs. You wouldn't just show them one real one and one fake one. You’d give them hundreds of examples: IDs with slightly off colors, blurry photos, weird fonts, and even a few near-perfect forgeries, alongside a stack of legitimate ones.

That's exactly how an AI checker learns. Its training data includes:

- Human-Written Text: Everything from classic novels and scientific papers to blog posts, news articles, and casual social media comments.

- AI-Generated Text: Content produced by a whole range of AI models, from early versions of GPT to the latest and greatest language generators out there.

By comparing these two giant datasets, the classifier starts to recognize the statistical patterns that algorithms leave behind. It flags patterns in sentence structure, word choice, and overall rhythm that show up again and again in machine-generated content but are far less common in human writing. This whole process is a core part of natural language processing, a field focused on teaching computers to understand how people talk. If you want to go deeper, check out our guide on what is natural language processing.

The better the training data, the smarter the detector. A model trained on a small or outdated set of examples will have a hard time keeping up with newer, more advanced AI writers, which leads to less accurate results.
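To show what "learning from labeled examples" looks like in practice, here is a minimal sketch. It assumes each text has already been reduced to two numbers, perplexity and burstiness, and it uses a hand-rolled logistic regression as a stand-in for the much larger models real detectors use. Every feature value below is invented for illustration, and `train` and `ai_probability` are hypothetical names.

```python
import math

# Hypothetical (perplexity, burstiness) pairs, invented for illustration.
# Label 1 = AI-generated, 0 = human-written.
TRAINING_DATA = [
    ((12.0, 0.15), 1), ((15.0, 0.20), 1), ((10.0, 0.10), 1), ((18.0, 0.25), 1),
    ((45.0, 0.70), 0), ((60.0, 0.55), 0), ((38.0, 0.80), 0), ((52.0, 0.65), 0),
]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def scale(features):
    """Bring perplexity into roughly the same range as burstiness."""
    perplexity, burstiness = features
    return (perplexity / 100.0, burstiness)

def train(data, epochs=2000, learning_rate=0.5):
    """Fit two weights and a bias with plain stochastic gradient descent."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in data:
            x = scale(features)
            predicted = sigmoid(weights[0] * x[0] + weights[1] * x[1] + bias)
            error = predicted - label
            weights[0] -= learning_rate * error * x[0]
            weights[1] -= learning_rate * error * x[1]
            bias -= learning_rate * error
    return weights, bias

def ai_probability(features, weights, bias):
    """Return the model's estimated probability that the text is AI-generated."""
    x = scale(features)
    return sigmoid(weights[0] * x[0] + weights[1] * x[1] + bias)

weights, bias = train(TRAINING_DATA)

print(f"predictable, uniform text: {ai_probability((11.0, 0.12), weights, bias):.2f}")
print(f"surprising, varied text:   {ai_probability((50.0, 0.75), weights, bias):.2f}")
```

The output is a probability between 0 and 1, not a yes or no. With only eight examples this toy model knows very little, which mirrors the point above: a detector is only as good as the data it was trained on.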

Analytical Approaches Models Use

Beyond just the training data, AI checkers use a few different analytical methods to make their decision. These tools aren't just reading the words; they're running a deep statistical analysis on the text’s DNA. Some models focus on linguistic features, like how complex the sentences are or the use of transitional phrases.

Others look at statistical properties, like how often certain words appear or how predictable the next word in a sentence is. This reliance on AI to evaluate content is part of a much bigger trend. Recent data shows that 78% of organizations used AI in the past year, a huge jump from 55% the year before. You can find more insights in this report on the rise of AI in business operations. That fast adoption is exactly why understanding how these checkers work is becoming so important for creators and businesses.

Why AI Checkers Sometimes Get It Wrong

No AI tool is perfect, and that’s especially true for AI checkers. For all their sophistication, they can—and do—make mistakes.

The most common error is a false positive, where the tool flags perfectly good human writing as AI-generated. It’s frustrating, sure, but understanding why it happens is the key to using these tools effectively.

At the end of the day, an AI checker’s verdict is just a probability, not a hard fact. The score it gives you is based on how closely your text matches the statistical patterns it was trained to see as machine-like. If your writing happens to line up with those patterns, you might get a high AI score even if you wrote every word yourself. It's a crucial part of understanding how an AI checker works in the real world.

When Human Writing Looks Like AI

So, what kind of human writing can fool an AI detector? Often, it’s content that’s naturally predictable or follows a tight, rigid structure. The very same qualities that make a text clear and formulaic can also give it low perplexity and low burstiness.

Here are a few common scenarios where human content gets flagged:

- Technical or Academic Writing: Think manuals, scientific papers, and legal documents. They often rely on repetitive phrasing and a formal tone to be precise. That structured style can easily mimic the predictability of AI.

- Content from Non-Native Speakers: Someone writing in a second language might naturally use simpler sentence structures and more common vocabulary. While it might be perfectly correct, this can lower the text's complexity and "burstiness," triggering a false positive.

- Overly Simplified Prose: Writing that uses very short sentences and basic words—like content for young kids or total beginners—can lack the linguistic variety that detectors expect from a human writer.

The key takeaway is that an AI detection score is just a starting point. It's a statistical guess based on patterns, and it completely lacks the context to understand an author's intent, style, or background.
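A quick piece of arithmetic shows why a flag is only a starting point. The sketch below applies Bayes' rule to three numbers that are purely hypothetical: the share of texts that are actually AI-written, the share of AI texts the checker catches, and the share of human texts it wrongly flags. None of these figures describe a real tool.

```python
def prob_ai_given_flag(prior_ai, true_positive_rate, false_positive_rate):
    """Bayes' rule: of all flagged texts, what share is really AI-written?"""
    flagged_ai = true_positive_rate * prior_ai
    flagged_human = false_positive_rate * (1.0 - prior_ai)
    return flagged_ai / (flagged_ai + flagged_human)

# Hypothetical scenario: 10% of submissions are AI-written, the checker
# catches 90% of them, and it wrongly flags 5% of human-written texts.
share = prob_ai_given_flag(prior_ai=0.10,
                           true_positive_rate=0.90,
                           false_positive_rate=0.05)

print(f"Share of flagged texts that are really AI: {share:.0%}")
```

Under these assumed numbers, only about two in three flagged texts are actually AI-written. The remaining third are human writers caught by a false positive, which is why context and judgment matter more than the raw score.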

What to Do with the Results

If you get a high AI score on your own writing, don't panic. Instead of seeing it as a final judgment, treat it as a signal to take a second look.

Does the text sound a little robotic? Could the sentences use a bit more variety?

You can use that feedback to make your writing more engaging for a human reader. This is exactly where a tool like Natural Write comes in. It helps you tweak your text to sound more authentic, which in turn helps it get past detection systems. It bridges that gap between clear, structured writing and prose that sounds genuinely human.

How AI Humanizers Actually Improve Your Content

The conversation around AI content is changing. It's no longer just about spotting machine-generated text, but about refining it into something that actually connects with a human audience. This is where AI humanizers come in. They act as a bridge between a raw AI draft and polished, high-quality content.

Instead of just trying to "beat" detectors, the best humanizers focus on improving the writing itself. They go after the exact patterns that AI checkers are trained to find in the first place.

Creating a More Natural, Authentic Feel

An AI humanizer works by tweaking the text to increase its perplexity and burstiness. Think of it as a smart editor that knows precisely what makes writing sound human. It does more than just swap out a few words; it restructures sentences and varies the vocabulary to create a more dynamic, less predictable reading experience.

This process directly targets the main signals that AI detectors look for:

- Increasing Burstiness: The tool breaks up those uniform, monotonous sentence structures. It introduces a healthy mix of long, flowing sentences and short, punchy statements. This creates the natural rhythm you find in human writing.

- Boosting Perplexity: It replaces the common, predictable word choices that AI models love with more nuanced and interesting language. This makes the text less obvious and way more engaging.

By making these kinds of adjustments, the content doesn't just become undetectable—it becomes genuinely better. It’s less about trickery and more about producing content that delivers real value. You can see how this works in our detailed guide on the AI text humanizer from Natural Write.

The real goal of humanization isn't to fool a machine. It's to elevate AI-assisted content to a standard that connects with people, making it more readable, persuasive, and effective.

Meeting the Demands of Modern Content

This need for refinement is exploding right alongside AI's growth in content marketing. With the AI marketing industry currently valued at $47.32 billion and expected to jump to $107.5 billion by 2028, the demand for high-quality, AI-assisted content is only going up.

We’re already seeing it in the numbers. 71.7% of content marketers use AI for outlining, and 57.4% use it for drafting. As we lean more on these tools, the need to make sure the final output has that human touch becomes critical. You can discover more insights about AI marketing statistics on SEO.com to see just how deep these trends run.

Ultimately, understanding how an AI checker works gives you the roadmap to creating better content. By focusing on the same metrics they do—perplexity and burstiness—humanizers like Natural Write help you refine your drafts, ensuring your message isn't just delivered, but actually felt.

Focusing on Quality in an AI-Driven World

The back-and-forth between AI writers and detectors can feel like an endless game of cat and mouse. But trying to figure out how an AI checker works just to sneak past it misses the point entirely. The real win isn't about evasion; it's about creating genuinely valuable, high-quality content that actually serves your audience.

This isn't just a hunch—it's where the entire industry is headed. Search engines like Google are now obsessed with helpful content, making the line between human and well-edited AI writing far less important than the value you deliver. The focus has shifted from how something was written to how well it serves the reader.

AI as a Creative Partner

The smartest way to navigate this new reality is to see AI as a powerful assistant, not a replacement for your own insight. It's fantastic for banging out a first draft, summarizing dense information, or just getting you past that blinking cursor of writer's block. But it still can't replicate the strategic oversight, emotional intelligence, and unique perspective a human brings to the table.

True success in modern content creation is found in the blend of technological efficiency and a deep, authentic understanding of what your readers truly need and want.

Let's be clear: AI is only going to become more integrated into our workflows. It's already woven into countless AI automation services for both content and business operations, so any serious effort to maintain content quality has to account for it.

This partnership model is the future. By combining the raw speed of AI with human creativity and strategy, you can produce content that isn't just efficient, but also deeply resonant and genuinely useful. That’s how you satisfy both the algorithms and the actual people you’re trying to reach.

A Few Common Questions About AI Checkers

Once you start digging into how AI checkers really work, a few practical questions always seem to pop up. Let's tackle some of the most common ones, from accuracy to how these tools fit into school and work.

Are AI Checkers 100% Accurate?

Nope. AI checkers are not 100% accurate, and it’s important to remember that. Their results are probabilities, not verdicts. They’re trained to spot the statistical footprints of AI writing, such as low perplexity and low burstiness. But sometimes, human writing has those same footprints.

Think about a technical guide or an essay from a non-native English speaker. That kind of writing can easily get flagged by mistake. It’s best to treat a detection score as a guide for a closer look, not as a final judgment.

An AI detection score is a statistical guess, not a confirmation. Always apply your own judgment and consider the context of the writing before making a final decision.

Can AI Checkers Detect Humanized Content?

This is where it gets tricky. A good AI humanizer is built specifically to erase the signals that detectors are looking for. By mixing up sentence structures, swapping in more nuanced vocabulary, and improving the overall flow, it makes AI-generated text look, statistically, like something a person wrote.

No tool is foolproof, but a solid humanizer can drop the detection score dramatically, often to the point where a checker can no longer tell the content apart from human writing. The goal isn’t just to be undetectable. It’s to create something that’s actually better and more engaging for the reader.

Is It Okay to Use AI Checkers for School or Work?

Absolutely. In fact, using an AI checker can be a really responsible move in both academic and professional settings. Many universities and companies already use them to maintain academic integrity or ensure their content feels original and high-quality.

By running your own work through a checker, you get to see what they see. It gives you a chance to polish your writing, make sure it meets the standards, and head off any potential issues before they come up.

Ready to ensure your content sounds authentically human? Use the integrated AI checker from Natural Write to flag robotic text, then polish it to perfection with our one-click humanizer. Try it for free at https://naturalwrite.com and create content that connects.