Is ZeroGPT Accurate and Can It Be Trusted?

February 1, 2026

So, is ZeroGPT accurate? The short answer is: it's complicated. While the company claims over 98% accuracy, my own experience and a heap of independent tests show its real-world performance is more in the 70-80% ballpark. And that number can swing wildly depending on what you're checking.

Let's dig into what that actually means.

Deconstructing ZeroGPT's Accuracy Claims

When you see a big accuracy number like "98%," it’s easy to be impressed. But that single stat hides a lot of nuance—the kind of nuance that really matters if you're a student worried about a false accusation or a teacher trying to verify an assignment.

The real test for a tool like ZeroGPT isn't just about catching AI text. It's just as much about not flagging human writing by mistake. This is where we need to talk about two critical ideas: false positives and false negatives.

The Problem of False Positives

A false positive is when the tool screams "AI!" at a piece of writing that was 100% written by a human. Frankly, this is the most dangerous kind of error.

Imagine being a student who poured hours into an essay, only to be flagged for cheating. Or a professional writer whose client rejects their work because a detection tool got it wrong. It’s a huge problem.

From what I've seen, ZeroGPT tends to produce more false positives with certain types of writing:

- Formal academic work: The very structure and precise language of a good essay can sometimes look like an AI pattern.

- Heavily edited text: When you polish your writing until it shines, you smooth out the "human" imperfections that these tools often look for.

- Non-native English writing: Common phrasing and sentence structures used by non-native speakers can sometimes confuse the algorithm.

A tool built to uphold integrity can, ironically, end up punishing people for writing clearly and carefully. Suddenly, the burden of proof is on you to prove your own work is authentic.

Understanding False Negatives

The flip side of the coin is the false negative. This is when AI-generated text gets a pass and is labeled as human-written. Catching AI text is the detector's main job, so a false negative is a miss at the one thing it was built to do.

This usually happens when AI text has been cleverly edited or "humanized" to sound less robotic. While a false negative won't get an innocent person in trouble, it completely undermines the tool’s purpose for anyone trying to confirm originality.

At the end of the day, ZeroGPT's true accuracy isn't a single number. It lives in the messy middle, somewhere between the marketing claims and its real-world slip-ups.
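To see why one headline figure can hide both kinds of error, here is a minimal sketch that turns raw test counts into accuracy, false positive rate, and false negative rate. The function name and the counts are made up for illustration; they are not ZeroGPT results.

```python
def detector_metrics(tp, fp, tn, fn):
    """Summarise a detector's results, treating 'AI' as the positive class.

    tp: AI text flagged as AI        fn: AI text labelled human
    fp: human text flagged as AI     tn: human text labelled human
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "false_positive_rate": fp / (fp + tn),  # share of human texts wrongly flagged
        "false_negative_rate": fn / (fn + tp),  # share of AI texts that slipped through
    }

# Hypothetical counts for illustration only: 100 AI samples and 100 human samples.
metrics = detector_metrics(tp=90, fp=30, tn=70, fn=10)
print(metrics)
```

With these invented counts the detector is right 80% of the time overall, yet it still flags 30% of the human samples. That gap is exactly what a single accuracy number glosses over.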

ZeroGPT Accuracy: A Realistic Overview

The table below breaks down ZeroGPT's practical accuracy across different content types, based on third-party studies that go beyond the official claims. It gives you a much better feel for where the tool shines and where it struggles.

| Content Type | Observed Accuracy | Common Issue |
|---|---|---|
| Raw AI Output (e.g., ChatGPT) | 85% - 95% | Generally high, but struggles with newer AI models. |
| "Humanized" or Paraphrased AI | 50% - 70% | Easily fooled by simple edits and rewriting tools. |
| Formal Academic Writing (Human) | 60% - 80% | Prone to false positives; mistakes structured writing for AI. |
| Creative or Informal Writing (Human) | 90% - 98% | Very good at recognizing human creativity and nuance. |
| Non-Native English Writing (Human) | 55% - 75% | High risk of false positives due to unfamiliar sentence patterns. |

As you can see, context is everything. While ZeroGPT is pretty good at spotting unedited AI text, its reliability plummets when dealing with edited content or certain styles of human writing. This is why you can't rely on its score alone.

How ZeroGPT Detects AI Writing

To get a real sense of ZeroGPT's accuracy, we need to pop the hood and see how it actually works. Think of it less like a reader and more like a digital forensic analyst, trained to spot the subtle fingerprints that AI language models tend to leave all over their work. The tool's core 'DeepAnalyse' technology isn't magic—it’s just a highly advanced form of pattern recognition.

ZeroGPT is essentially hunting for two specific linguistic giveaways: perplexity and burstiness. They might sound technical, but the ideas behind them are surprisingly straightforward.

The Role of Perplexity

Perplexity is just a fancy word for how predictable a piece of writing is. When humans write, our word choices can be creative, a little quirky, or sometimes even a bit messy. This makes our writing less predictable, giving it high perplexity.

AI models, however, are trained to play the odds. They build text by favoring the words that are statistically most likely to come next. This results in text that is often incredibly smooth, logical, and, frankly, predictable. It has low perplexity. ZeroGPT’s algorithm is built to notice this unnatural predictability, flagging text that feels a little too perfect.

When a text is so smooth that every word feels like the most obvious possible choice, ZeroGPT's alarm bells start ringing. It's a classic sign that a machine probably did the writing.
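For the curious, perplexity has a standard textbook definition: the exponential of the average negative log-probability a language model assigns to each word. The sketch below uses that general formula with invented probabilities. ZeroGPT has not published how it scores text, so treat this as an illustration of the idea, not its implementation.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Made-up probabilities a language model might assign to each next word.
predictable = [0.9, 0.8, 0.85, 0.9]  # every word is the "obvious" choice
surprising = [0.2, 0.05, 0.4, 0.1]   # quirkier, less expected word choices

print(round(perplexity(predictable), 2))  # lower score: smooth, predictable text
print(round(perplexity(surprising), 2))   # higher score: less predictable text
```

The predictable list scores far lower than the surprising one, which is the signal a perplexity-based detector leans on.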

Analyzing Text Burstiness

Burstiness is all about the rhythm and flow of sentence structure. Listen to how people talk—we naturally mix short, punchy statements with longer, more detailed sentences. This creates a varied, dynamic rhythm, or what we call high burstiness.

AI-generated text often misses this completely. It tends to fall into a rut, producing sentences of a similar length and structure over and over again. This creates a monotonous, robotic flow (low burstiness), which is another huge red flag for a detector. ZeroGPT looks for this unnatural consistency that most human writers instinctively avoid.
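One rough way to put a number on burstiness is to measure how much sentence lengths vary relative to their average. The sketch below does that with a deliberately naive sentence splitter. The metric and the sample texts are assumptions chosen for illustration, not ZeroGPT's actual formula.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., ! or ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Standard deviation of sentence length divided by the mean length."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The tool scans the text. The tool checks the words. The tool gives a score."
varied = ("It failed. Nobody expected that, least of all the team "
          "that had spent months tuning it. Why?")

print(burstiness(uniform))           # 0.0, every sentence has five words
print(round(burstiness(varied), 2))  # well above zero, lengths swing from 1 to 14 words
```

A score near zero means every sentence is about the same length, which is the monotonous pattern described above.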

If you're curious to learn more about these signals, it’s worth exploring what AI detectors look for during their analysis.

By combining its analysis of perplexity and burstiness, ZeroGPT builds its case. It’s not actually reading for meaning; it's scanning for mathematical patterns. And that’s exactly why it’s pretty good at catching raw AI output but can be tricked by text that a human has edited to reintroduce that messy, natural variety.

What Independent Studies Say About ZeroGPT

While ZeroGPT's own marketing understandably touts high accuracy, independent testing gives us a much more realistic picture of how it holds up in the wild. Tech reviewers and researchers have thrown everything at this tool, from student essays to marketing copy, and the results point the same way: its effectiveness is not a single, fixed number. It changes, a lot, depending on what you ask it to analyze.

These studies generally agree that ZeroGPT is pretty good at spotting raw, untouched text spat out by models like ChatGPT. In these straightforward cases, its detection rates are often high because the AI’s writing style, with its predictable sentence structures and word choices, is easy to flag. The moment a human steps in to edit, however, things get murky.

Performance on Academic and Edited Content

One of the biggest headaches for ZeroGPT is academic writing. The formal, structured, and grammatically perfect nature of scholarly work can look a lot like the very patterns these detectors are trained to find. This leads to a genuinely worrying number of false positives, where a student's original work gets incorrectly flagged as AI-generated.

For example, one comprehensive review ran over 70 different samples through the tool and found its real-world accuracy was closer to 80%. Digging deeper, the results for human-written essays were particularly telling. It produced a clear false-positive rate of 25%, flagging 3 out of 12 original essays as ‘mostly AI’. If you include the more ambiguous ‘uncertain’ or ‘partly AI’ labels, that suspicion zone shot up to 58%. That means nearly six out of every ten human-written pieces triggered some kind of warning. You can find more details on how they tested this in the full ZeroGPT review.
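Those percentages are easy to sanity-check. In the quick sketch below, the 3 and the 12 come from the review, while the 7 is an inference: it is the only count out of 12 that rounds to 58%, so the review may well report it differently.

```python
essays = 12      # human-written essays in the review
mostly_ai = 3    # flagged 'mostly AI' (reported in the review)
any_warning = 7  # inferred: the only count out of 12 that rounds to 58%

print(f"{mostly_ai / essays:.0%}")    # 25%
print(f"{any_warning / essays:.0%}")  # 58%
```

With only 12 essays, a single extra flag moves the rate by more than eight percentage points, so small test sets like this are best read as rough signals.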

This vulnerability isn't just limited to academic work. It applies to any text that's been edited or "humanized." When a writer takes a first draft from an AI and starts rephrasing sentences, adding their own stories, or tweaking the tone, those statistical fingerprints ZeroGPT looks for start to fade.

The more a human polishes an AI-generated draft, the less chance ZeroGPT has of figuring out where it came from. It's a classic cat-and-mouse game, and a few simple edits are often all it takes to fool the detector.

Key Takeaways from Third-Party Testing

Looking at the findings from multiple sources, a consistent pattern of strengths and weaknesses emerges. It's vital to understand these before you put too much trust in its results.

- Strong on Raw AI: It does its best work on completely unedited text straight from a large language model.

- Weak on Nuanced Human Writing: It often gets confused by formal academic writing and even text from non-native English speakers, leading to false accusations.

- Easily Bypassed: Even light to medium editing, like paraphrasing and rearranging sentences, can cause its accuracy to plummet.

- Inconsistent with Mixed Content: When a document is a mix of human and AI writing, its ability to pinpoint which parts are which is hit-or-miss.

Ultimately, the consensus among independent testers is that while ZeroGPT can be a decent first-pass tool, its accuracy simply isn't reliable enough for making high-stakes decisions. The risk of wrongly accusing a student or writer is a major ethical concern echoed across almost every evaluation. This really gets to the heart of a bigger question, which we explore in our guide on whether AI detectors actually work.

The High Stakes of False Positives

When an AI detector gets it wrong, it’s rarely a harmless mistake. This is especially true with false positives—when perfectly human writing gets flagged as being generated by AI. The fallout isn't just a bad score on a screen; it can be deeply personal and damaging.

Think about it from a student's perspective. A false positive could trigger an accusation of academic dishonesty, putting their grades, scholarships, or even their spot at the university on the line. For a freelance writer, a wrongly flagged article means a client rejecting their work, a hit to their professional reputation, and lost income. Suddenly, the burden of proof is on you to prove your own words are, in fact, your own.

Relying on an imperfect tool creates a genuine risk for anyone whose work is being evaluated. An algorithm's error can have profound, real-world consequences for innocent individuals.

This isn't just a hypothetical problem. It's a well-documented weak spot in how tools like ZeroGPT actually work. Certain styles of human writing are far more likely to be misjudged, creating a pattern of predictable errors that you need to be aware of.

Where ZeroGPT Is Most Likely to Fail

So, what kind of writing trips up ZeroGPT the most? The tool seems to stumble when it encounters text that, while written by a person, happens to share some surface-level traits with AI output. Because its algorithm is trained to look for predictability and uniformity, it can get confused by human writing that is just very structured or grammatically perfect.

Here are a few common scenarios where I’ve seen false positives pop up:

- Non-Native English Speakers: Someone who learned English as a second language might naturally use sentence structures that are grammatically sound but less colloquial or varied. This can accidentally mimic the logical, but often less nuanced, style of an AI.

- Highly Edited or Formal Content: Think of academic papers, technical guides, or professionally edited articles. This type of writing is intentionally precise and consistent. That lack of "burstiness"—the natural ebb and flow of human expression—can trick the detector into seeing an AI fingerprint that isn't there.

- Blended Human-AI Text: What happens when you use AI for a rough draft or to brainstorm ideas, then heavily edit it yourself? You get a hybrid. ZeroGPT often has a hard time parsing this, sometimes just flagging the entire piece as AI-generated instead of recognizing the human touch.

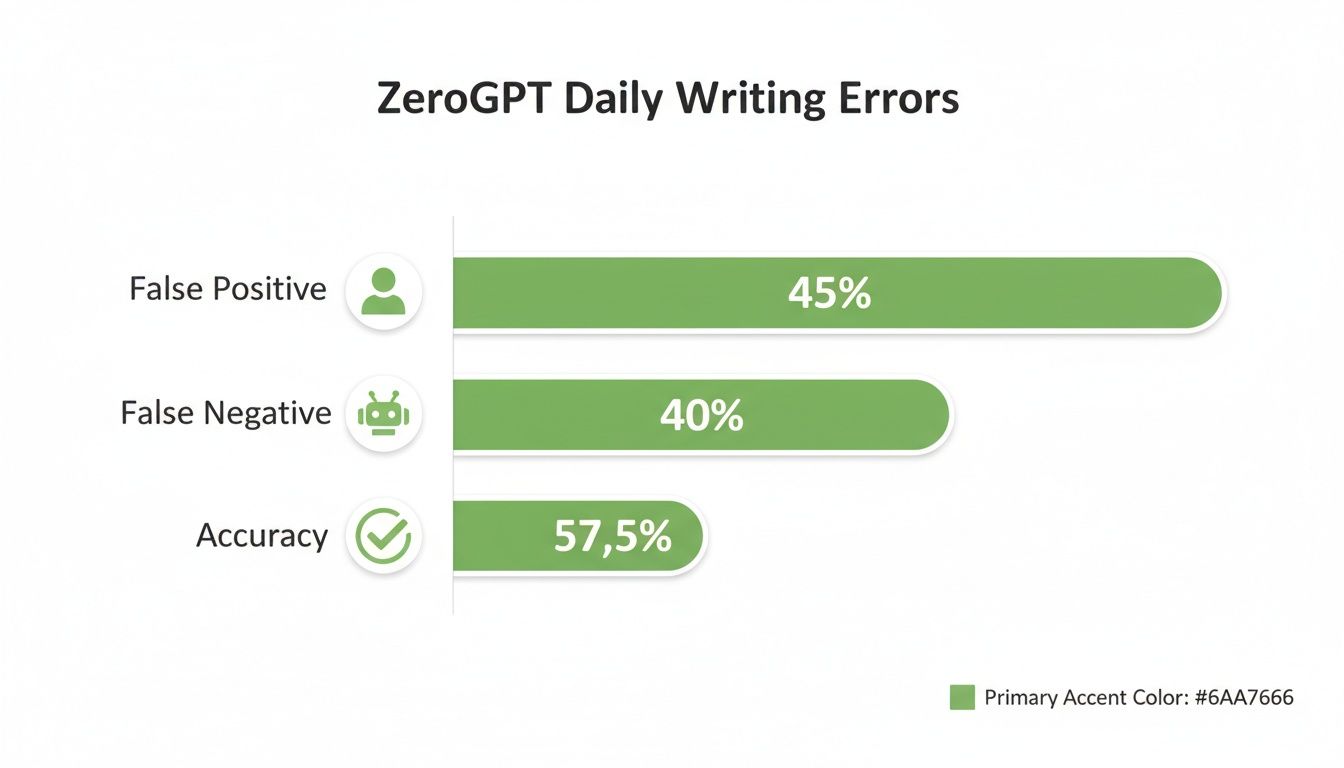

These weak points were thrown into sharp relief during a series of tests on everyday writing, like social media posts and blog content. In one analysis, ZeroGPT showed a staggering 45% false positive rate. That means it wrongly accused nearly half of the human-written samples of being AI. When you factor in the AI content it missed, its overall accuracy was a dismal 57.5%, revealing major reliability issues for common, real-world use. You can dig into the complete results of these daily writing tests on AffiliateBooster.com.

Knowing where these tools tend to fail is the first, and most important, step in protecting your work from being unfairly judged.

How ZeroGPT Compares to Other AI Detectors

ZeroGPT isn't operating in a vacuum. It’s just one of many tools trying to be the go-to AI detective, and when you put it up against competitors like GPTZero or Turnitin, you start to see the bigger picture: this is an industry-wide work in progress.

Frankly, no single tool is perfect. Some, like Turnitin, are woven into the fabric of academia and have a better track record with formal essays. Others are tuned to spot AI in creative or marketing copy. ZeroGPT seems to land somewhere in the middle. It’s a solid generalist tool—great for a quick first look, but it can lack the specialized nuance you might find elsewhere.

A Middle-of-the-Road Performance

When you look at independent tests, ZeroGPT consistently shows up as a decent, but not exceptional, performer. One third-party analysis of AI detectors looked at 120 different texts—everything from academic papers to job applications to daily blog posts.

The results? ZeroGPT’s overall accuracy came in at 67.5%.

What's really interesting is how that number breaks down. It scored 72.5% on academic and application-style writing but dropped to just 57.5% on more casual content like blogs and social media. This tells you something crucial: a detector’s effectiveness isn't a fixed number; it changes depending on what you ask it to analyze.
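An overall score is just a weighted average of the category scores, so it depends on how many samples sit in each category. The sketch below assumes the 120 texts split into 80 academic or application pieces and 40 casual ones. That split is an inference that happens to reproduce the reported 67.5%; it is not a figure quoted from the analysis.

```python
def overall_accuracy(groups):
    """groups: list of (sample_count, accuracy) pairs; returns the pooled accuracy."""
    total = sum(n for n, _ in groups)
    correct = sum(n * acc for n, acc in groups)
    return correct / total

# Assumed split of the 120 texts: the 80/40 sizes are an inference, not a reported figure.
groups = [(80, 0.725), (40, 0.575)]
print(f"{overall_accuracy(groups):.1%}")  # 67.5%
```

Change the mix toward casual content and the same tool would post a noticeably lower headline number, without its behavior changing at all.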

This chart really drives the point home, showing just how much ZeroGPT can struggle with everyday writing.

As you can see, the error rates for informal content are sky-high, with both false positives and false negatives being a major issue.

You should never treat a single AI detector as the final authority. The smartest way to use these tools is to cross-reference your results with a few different ones. This gives you a much more reliable and defensible conclusion.
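Cross-referencing can be as simple as writing down each tool's score and checking whether they agree. Here is a minimal sketch of that idea. The detector names, scores, and 0.5 threshold are all hypothetical, and the scores are assumed to be typed in by hand, since this does not call any real detector API.

```python
def cross_check(scores, threshold=0.5):
    """scores: dict of detector name -> estimated probability the text is AI (0 to 1)."""
    flags = {name: score >= threshold for name, score in scores.items()}
    ai_votes = sum(flags.values())
    if ai_votes == len(flags):
        verdict = "all detectors flag AI"
    elif ai_votes == 0:
        verdict = "no detector flags AI"
    else:
        verdict = "detectors disagree; treat as inconclusive"
    return verdict, flags

# Hypothetical scores entered by hand after running the same text through three tools.
verdict, flags = cross_check({"detector_a": 0.82, "detector_b": 0.31, "detector_c": 0.44})
print(verdict)  # detectors disagree; treat as inconclusive
```

Treating disagreement as "inconclusive" instead of picking a winner is a deliberate choice here: when the tools can't agree, that is evidence about the tools, not about the writer.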

At the end of the day, comparing these tools teaches us a vital lesson. The real question isn't "Which detector is the best?" but "How can I use these imperfect tools responsibly?" Running a check on one platform just isn't enough. You need a process to make sure your writing is solid enough to navigate all of them.

Making Your AI-Assisted Content Undetectable

Knowing the limits of AI detectors is just one piece of the puzzle. The real solution lies in actively "humanizing" the text you generate with AI. It's all about adding back the nuance, complexity, and even the slight imperfections that algorithms are trained to see as non-human.

The idea isn't to cheat a system. It's about taking ownership of the content and turning a generic AI draft into something that truly reflects your own voice and insight. With a few smart edits, you can use AI as a powerful starting point without the fear of your work being flagged by a flawed tool.

Refining Sentence Structure and Flow

One of the biggest giveaways of AI writing is its monotonous rhythm. AI models tend to write sentences that are all roughly the same length and structure, which feels robotic. Your first mission is to disrupt that predictability.

- Vary Your Sentence Length: Mix it up. Use short, punchy sentences for impact, then follow them with longer, more descriptive ones. This creates a natural ebb and flow that detectors recognize as human.

- Embrace Complexity: Don't be afraid to use clauses, conjunctions, and different types of punctuation. Building more intricate sentences breaks up the simple, subject-verb-object pattern that AI often falls into.

- Inject Your Voice: Make it sound like you. Ask a question. Add a brief, personal aside. Frame a point with a quick story. These small touches make the content feel far more conversational and authentic.

If you really want to get good at this, learning how to humanize AI text is the key to mastering these skills.

Enhancing Vocabulary and Word Choice

Vocabulary is another dead giveaway. AI models often reach for the most statistically common words, which can make the writing feel a bit sterile and overly formal. Humanizing the language means being more intentional and creative with your word choices.

Simply swapping out a few predictable words for more specific or evocative synonyms can dramatically shift the text from sounding machine-generated to thoughtfully crafted by a human.

This proactive approach puts you in the driver's seat. Instead of just reacting to a detector's score, you're creating content that is genuinely yours, and far less likely to be flagged, right from the beginning. For a deeper dive, our complete guide at https://naturalwrite.com/blog/how-to-make-ai-writing-undetectable explores even more advanced strategies. By focusing on these refinements, you give your final work the best chance of passing a detection scan.

Got Questions About ZeroGPT? Here Are Some Straight Answers

When you start using an AI detector, a bunch of questions pop up pretty quickly. Let's tackle some of the most common ones people ask when figuring out if ZeroGPT is the right tool for them.

Is the Paid Version of ZeroGPT Any More Accurate?

This is a big one. While paying for ZeroGPT gets you some nice perks like checking longer documents and uploading files in bulk, there's no solid evidence that its core detection engine is any better than the free one. The technology looking for AI patterns is the same across the board.

Think of the upgrade as buying a convenience pass, not an accuracy boost. The same old problems with false positives and negatives that we've talked about are just as likely to happen whether you're using the free or paid version.

What Do I Do If It Flags My Work as AI?

Getting a false positive—where your own, human-written work gets flagged as AI-generated—is maddening. The most important thing is not to panic. Instead, you need to be ready to prove your work is genuinely yours.

Here are a few things you can do right away:

- Show Your Work: Use something like Google Docs that keeps a version history. This lets you show exactly how your document came to life, from the first rough idea to the final polished piece.

- Share Your Research: Hand over your notes, outlines, and any sources you used. This trail of evidence proves you did the thinking and the work yourself.

- Get a Second Opinion: If ZeroGPT says your text is AI, run it through a couple of other detectors. If they all say "human," you have a strong case that the tools are unreliable and shouldn't be trusted as the final word.

Remember, a score from an AI detector isn't gospel. It's just one data point from a tool that's looking for patterns, and it often gets them wrong. Having proof of your writing process is your strongest defense.

Ultimately, answering "is ZeroGPT accurate?" is less about trusting its score and more about understanding its limits and being prepared for when it inevitably makes a mistake.